News

[2025/05] 🎉 I am honored to receive the Qualcomm Innovation Fellowship together with my amazing teammate Junyi Zhang!

[2025/04] 🎉 RoboVerse gets accepted by RSS 2025!

[2025/04] 🎉 RoboVerse is released!

[2024/09] 🎉 Three papers get accepted by CoRL 2024, of which RAM is selected as an Oral Presentation.

[2024/07] SAGE🌿 won the Best Paper Award at RSS 2024 SemRob Workshop

[2024/06] I won the Yunfan Award and was named a Rising Star at the World Artificial Intelligence Conference (top 15 early-career Chinese AI researchers)! I am the only undergraduate student to win this award so far.

[2024/05] 🎉 SAGE gets accepted to RSS 2024.

[2024/03] I am honored to receive the Berkeley Fellowship Award and Stanford Graduate Fellowship Award.

[2023/12] Excited to announce Simulately🤖, a go-to toolkit for robotics researchers navigating diverse simulators!

[2023/12] I'm honored to be selected as a Person of the Year of Peking University.

[2023/10] I gave an Oral Presentation on UniDexGrasp++ at ICCV 2023.

[2023/10] 🎉 UniDexGrasp++ is selected as Best Paper Finalist at ICCV 2023.

[2023/07] 🎉 Two papers get accepted to ICCV 2023 with UniDexGrasp++ receiving final reviews of all strong accepts (the highest ratings).

[2023/07] 🎉 One paper gets accepted to Machine Learning Journal.

[2023/03] 🎉 GAPartNet is selected as a Highlight at CVPR 2023 (Top 10% of accepted papers, top 2.5% of submissions).

[2023/02] 🎉 Three papers get accepted to CVPR 2023 with GAPartNet receiving final reviews of all accepts (the highest ratings).

[2023/01] 🎉 One paper gets accepted to ICRA 2023.

|

Research

My research interests lie broadly in Robotics and 3D Computer Vision, with a current focus on generalizable object perception, understanding, and manipulation. My research objective is to build an intelligent agent with the robust and generalizable ability to perceive and interact with complex real-world environments.

Representative works are highlighted.

|

|

Large Video Planner Enables Generalizable Robot Control

Boyuan Chen*,

Tianyuan Zhang*,

Haoran Geng*,

Kiwhan Song,

Caiyi Zhang,

Peihao Li,

William T. Freeman,

Jitendra Malik,

Pieter Abbeel,

Russ Tedrake,

Vincent Sitzmann,

Yilun Du

(*equal contribution)

Paper /

Project /

Code /

Bibtex

@misc{chen2025largevideoplanner,

title={Large Video Planner Enables Generalizable Robot Control},

author={Boyuan Chen and Tianyuan Zhang and Haoran Geng and Kiwhan Song and Caiyi Zhang and Peihao Li and William T. Freeman and Jitendra Malik and Pieter Abbeel and Russ Tedrake and Vincent Sitzmann and Yilun Du},

year={2025},

eprint={2512.15840},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={http://arxiv.org/abs/2512.15840},

}

ArXiv preprint

LVP is a video foundation model for robotics that generates video plans, which we retarget into executable robot motions. We train an open video model at foundation-model scale for generative robotics planning.

|

|

|

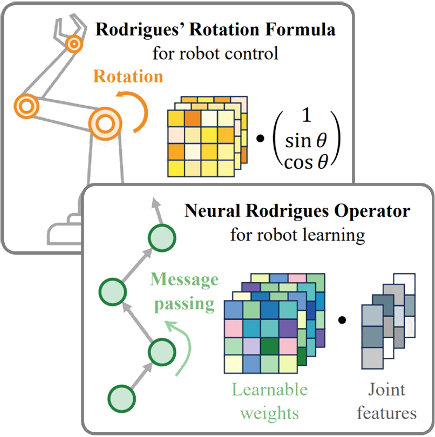

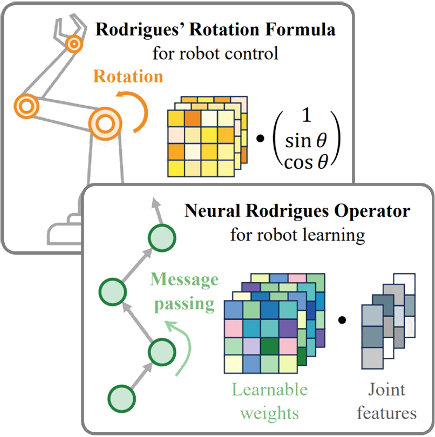

Rodrigues Network for Learning Robot Actions

Jialiang Zhang*,

Haoran Geng*,

Yang You*,

Congyue Deng,

Pieter Abbeel,

Jitendra Malik,

Leonidas Guibas

(*equal contribution)

Paper /

Project /

Bibtex

@misc{zhang2025rodriguesnetworklearningrobot,

title={Rodrigues Network for Learning Robot Actions},

author={Jialiang Zhang and Haoran Geng and Yang You and Congyue Deng and Pieter Abbeel and Jitendra Malik and Leonidas Guibas},

year={2025},

eprint={2506.02618},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2506.02618},

}

ICLR 2026, Oral Presentation

We design the Rodrigues Network (RodriNet), a novel neural architecture specialized for

processing actions.

|

|

|

ViTacFormer: Learning Cross-Modal Representation for Visuo-Tactile Dexterous Manipulation

Haoran Geng*†,

Liang Heng*,

Kaifeng Zhang,

Pieter Abbeel,

Jitendra Malik

(* equal contribution, † project lead)

Paper /

Project /

Bibtex

@misc{heng2025vitacformerlearningcrossmodalrepresentation,

title={ViTacFormer: Learning Cross-Modal Representation for Visuo-Tactile Dexterous Manipulation},

author={Liang Heng and Haoran Geng and Kaifeng Zhang and Pieter Abbeel and Jitendra Malik},

year={2025},

eprint={2506.15953},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2506.15953},

}

ArXiv preprint

We present ViTacFormer, a representation-learning approach that couples a cross-attention encoder to fuse high-resolution vision and touch with an autoregressive tactile prediction head that anticipates future contact signals.

|

|

|

SkillBlender: Towards Versatile Humanoid Whole-Body Loco-Manipulation via Skill Blending

Yuxuan Kuang*,

Haoran Geng*,

Amine Elhafsi,

Tan-Dzung Do,

Pieter Abbeel,

Jitendra Malik,

Marco Pavone,

Yue Wang

(*equal contribution)

Paper /

Project /

Code /

Bibtex

@article{kuang2025skillblender,

title={SkillBlender: Towards Versatile Humanoid Whole-Body Loco-Manipulation via Skill Blending},

author={Kuang, Yuxuan and Geng, Haoran and Elhafsi, Amine and Do, Tan-Dzung and Abbeel, Pieter and Malik, Jitendra and Pavone, Marco and Wang, Yue},

journal={arXiv preprint arXiv:2506.09366},

year={2025},

}

ArXiv preprint

SkillBlender is a framework for versatile humanoid whole-body loco-manipulation via skill blending.

|

|

|

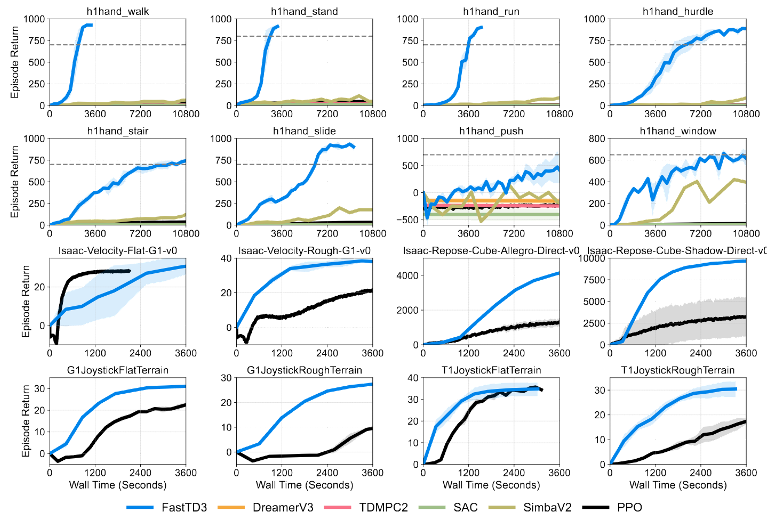

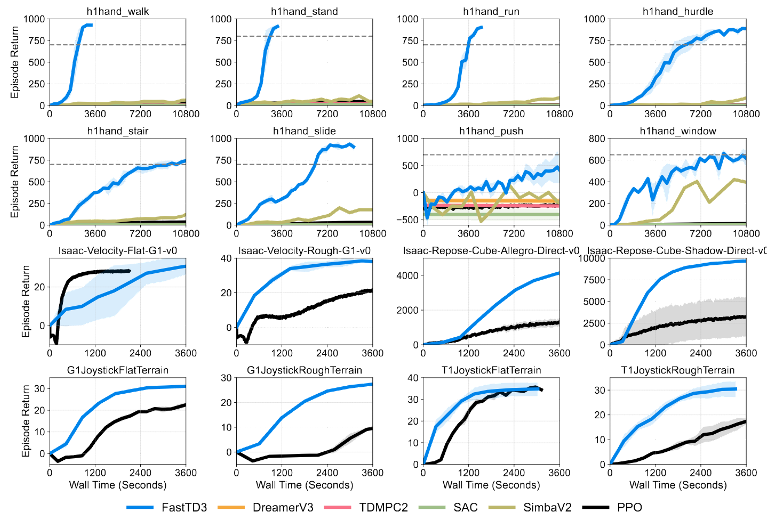

FastTD3: Simple, Fast, and Capable Reinforcement Learning for Humanoid Control

Younggyo Seo,

Carmelo Sferrazza,

Haoran Geng,

Michal Nauman,

Zhao-Heng Yin,

Pieter Abbeel

Paper /

Project /

Bibtex

@misc{seo2025fasttd3simplefastcapable,

title={FastTD3: Simple, Fast, and Capable Reinforcement Learning for Humanoid Control},

author={Younggyo Seo and Carmelo Sferrazza and Haoran Geng and Michal Nauman and Zhao-Heng Yin and Pieter Abbeel},

year={2025},

eprint={2505.22642},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2505.22642},

}

Technical Report, 2025

We introduce FastTD3, a simple, fast, and capable off-policy RL algorithm for humanoid control.

|

|

|

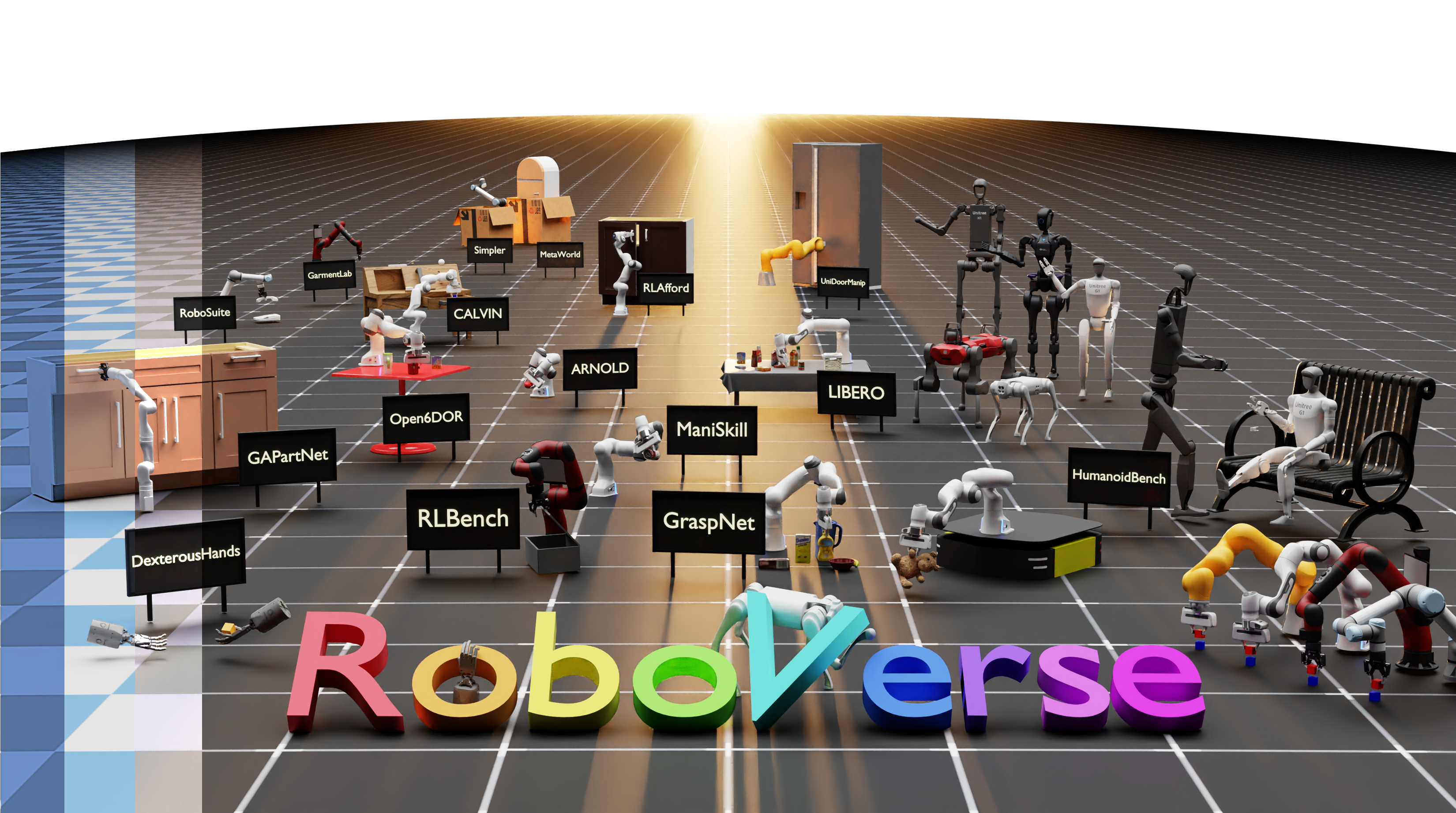

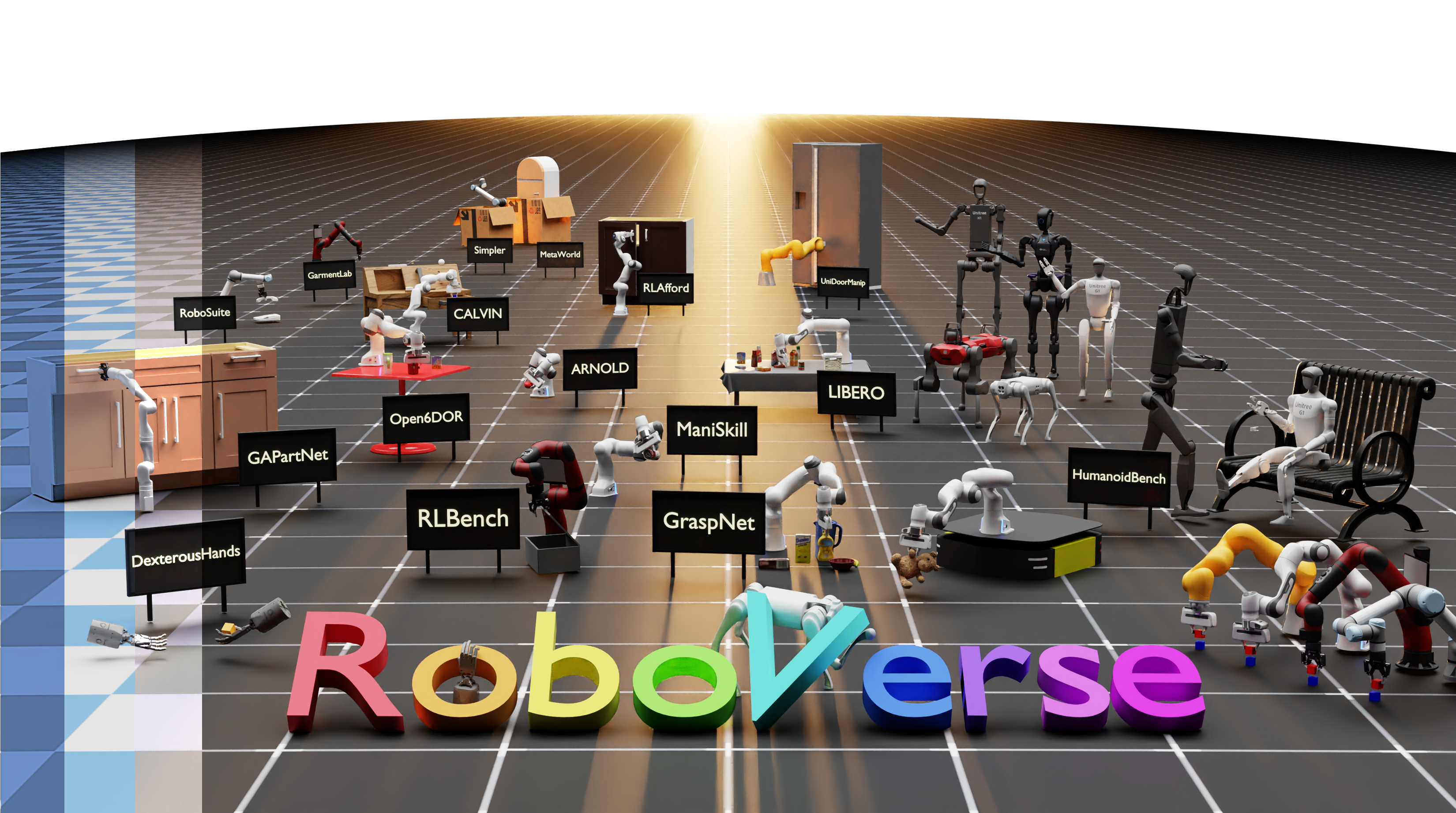

RoboVerse: Towards a Unified Platform, Dataset and Benchmark for Scalable and Generalizable Robot Learning

Haoran Geng*†,

Feishi Wang*,

Songlin Wei*,

Yuyang Li*,

Bangjun Wang*,

Boshi An*,

Charlie Tianyue Cheng*,

Haozhe Lou,

Peihao Li,

Yen-Jen Wang,

Yutong Liang,

Dylan Goetting,

Chaoyi Xu,

Haozhe Chen,

Yuxi Qian,

Yiran Geng,

Jiageng Mao,

Weikang Wan,

Mingtong Zhang,

Jiangran Lyu,

Siheng Zhao,

Jiazhao Zhang,

Jialiang Zhang,

Chengyang Zhao,

Haoran Lu,

Yufei Ding,

Ran Gong,

Yuran Wang,

Yuxuan Kuang,

Ruihai Wu,

Baoxiong Jia,

Carlo Sferrazza,

Hao Dong,

Siyuan Huang†,

Yue Wang†,

Jitendra Malik†,

Pieter Abbeel†

Paper /

Project /

Code /

Bibtex

@misc{geng2025roboverseunifiedplatformdataset,

title={RoboVerse: Towards a Unified Platform, Dataset and Benchmark for Scalable and Generalizable Robot Learning},

author={Haoran Geng and Feishi Wang and Songlin Wei and Yuyang Li and Bangjun Wang and Boshi An and Charlie Tianyue Cheng and Haozhe Lou and Peihao Li and Yen-Jen Wang and Yutong Liang and Dylan Goetting and Chaoyi Xu and Haozhe Chen and Yuxi Qian and Yiran Geng and Jiageng Mao and Weikang Wan and Mingtong Zhang and Jiangran Lyu and Siheng Zhao and Jiazhao Zhang and Jialiang Zhang and Chengyang Zhao and Haoran Lu and Yufei Ding and Ran Gong and Yuran Wang and Yuxuan Kuang and Ruihai Wu and Baoxiong Jia and Carlo Sferrazza and Hao Dong and Siyuan Huang and Yue Wang and Jitendra Malik and Pieter Abbeel},

year={2025},

eprint={2504.18904},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2504.18904},

}

RSS 2025, Oral Presentation

IROS 2025 @ RGMCW & RoboGen, Best Paper Award

We propose RoboVerse, a comprehensive framework for advancing robotics through a simulation platform, synthetic dataset, and unified benchmarks.

|

|

|

PhysPart: Physically Plausible Part Completion for Interactable Objects

Rundong Luo*,

Haoran Geng*,

Congyue Deng,

Puhao Li,

Zan Wang,

Baoxiong Jia,

Leonidas Guibas,

Siyuan Huang†

(*equal contribution)

Paper /

Project /

Code /

Bibtex

@article{Physpart,

title = {PhysPart: Physically Plausible Part Completion for Interactable Objects},

author = {Luo*, Rundong and Geng*, Haoran and Deng, Congyue and Li, Puhao and Wang, Zan and Jia, Baoxiong and Guibas, Leonidas and Huang, Siyuan},

journal = {International Conference on Robotics and Automation (ICRA)},

year = {2025},

url = {https://arxiv.org/abs/2408.13724},

}

ICRA 2025

PhysPart proposes a diffusion-based part generation model that utilizes geometric conditioning through classifier-free guidance and formulates physical constraints as a set of stability and mobility losses to guide the sampling process.

|

|

|

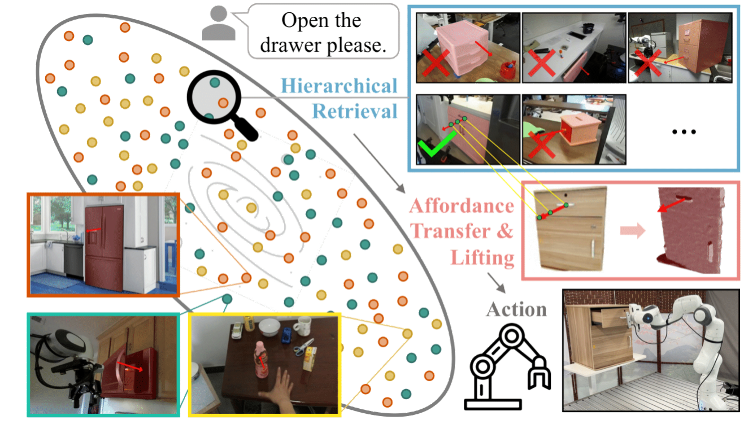

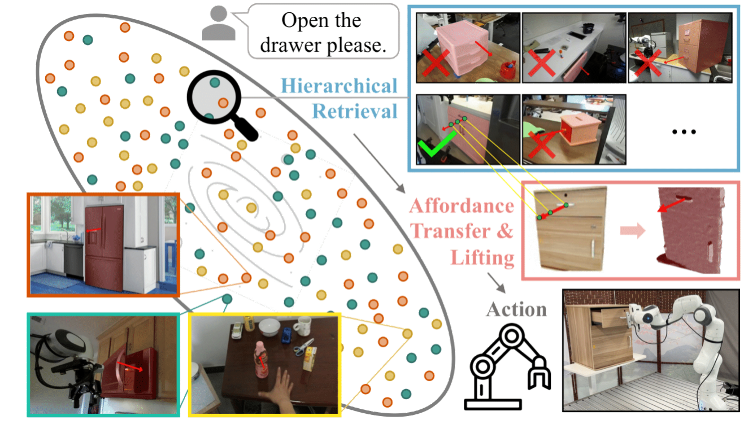

RAM: Retrieval-Based Affordance Transfer for Generalizable Zero-Shot Robotic Manipulation

Yuxuan Kuang*, Junjie Ye*, Haoran Geng*, Jiageng Mao, Congyue Deng, Leonidas Guibas, He Wang, Yue Wang

(*equal contribution)

Paper /

Project /

Code /

Bibtex

@misc{kuang2024ramretrievalbasedaffordancetransfer,

title={RAM: Retrieval-Based Affordance Transfer for Generalizable Zero-Shot Robotic Manipulation},

author={Yuxuan Kuang and Junjie Ye and Haoran Geng and Jiageng Mao and Congyue Deng and Leonidas Guibas and He Wang and Yue Wang},

year={2024},

eprint={2407.04689},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2407.04689},

}

CoRL 2024, Oral Presentation

RAM proposes a retrieve-and-transfer framework for zero-shot robotic manipulation,

featuring generalizability across various objects, environments, and embodiments.

|

|

|

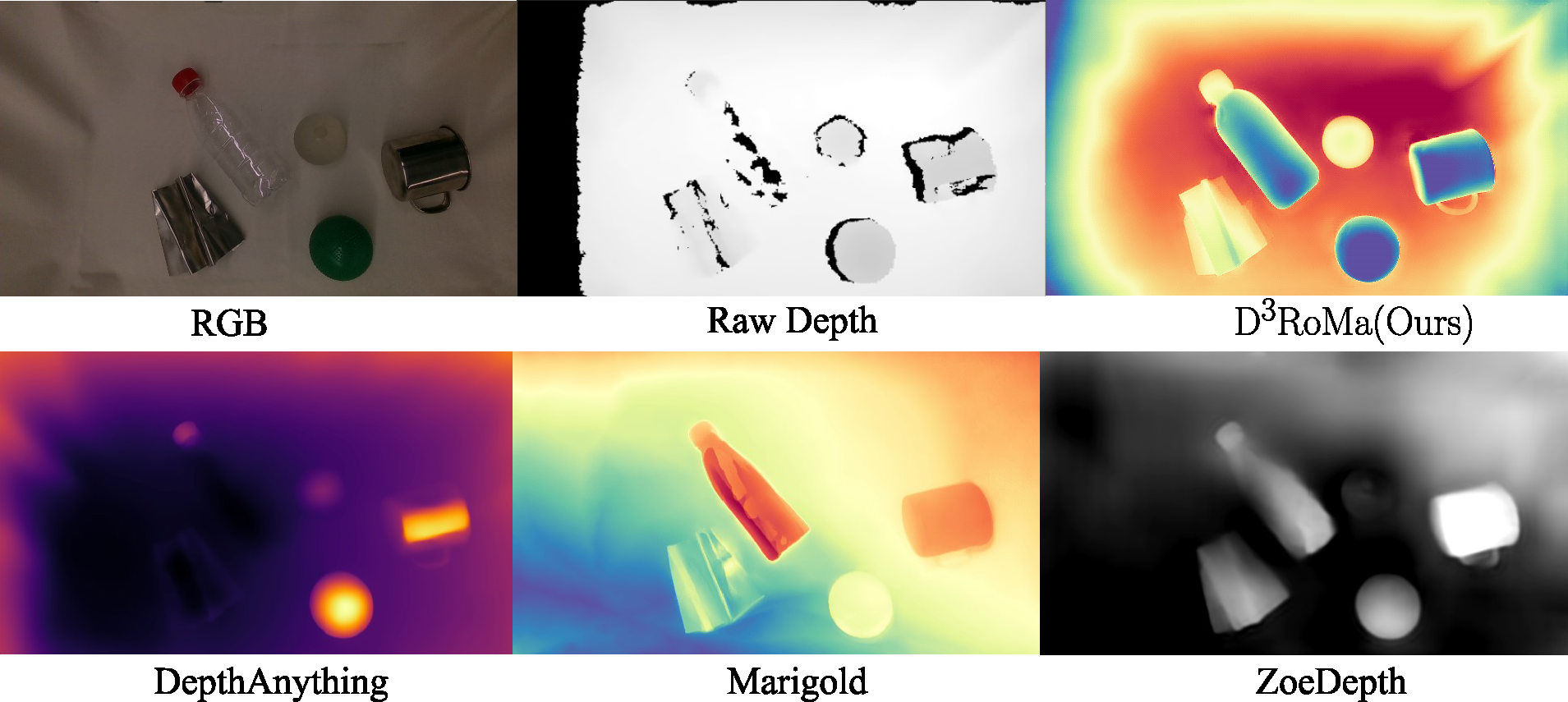

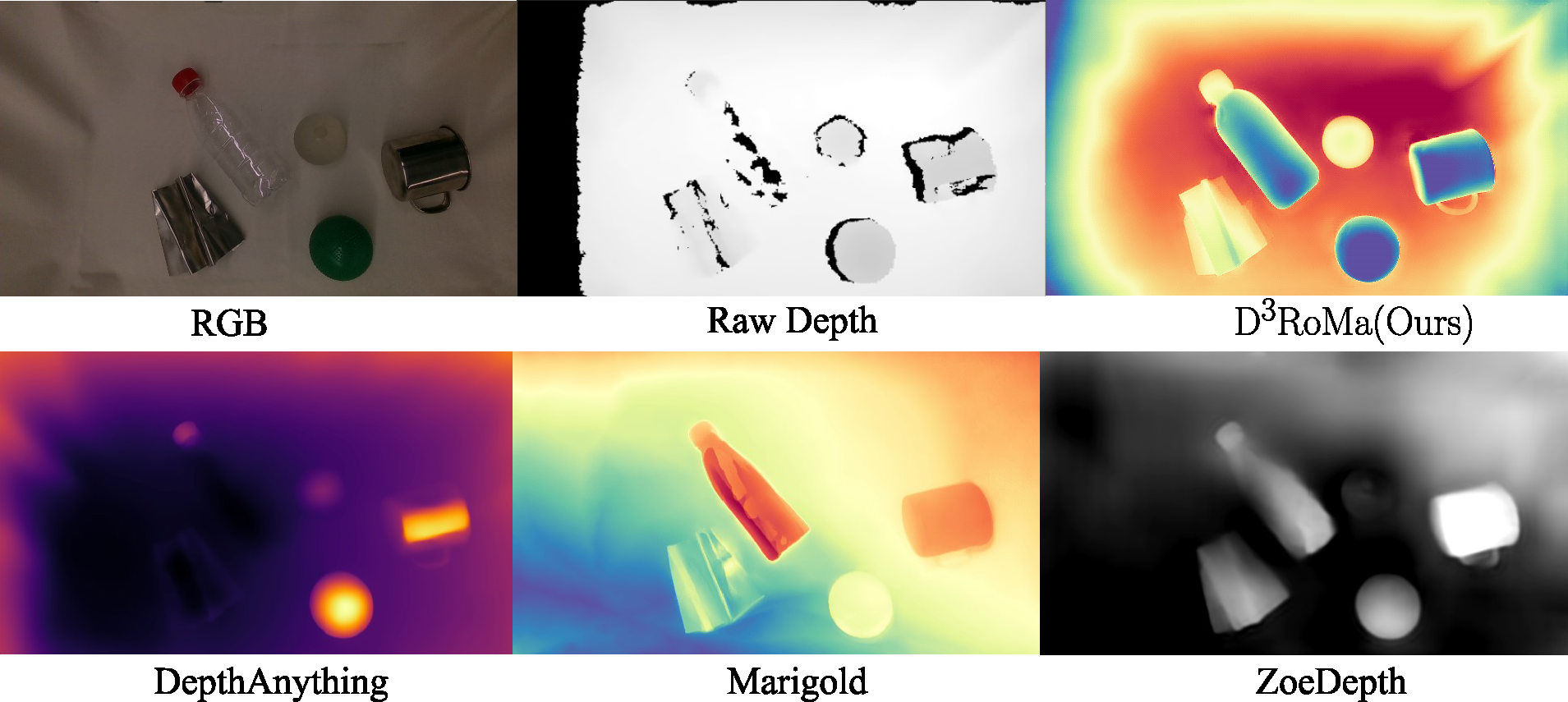

D3RoMa: Disparity Diffusion-based Depth Sensing for Material-Agnostic Robotic Manipulation

Songlin Wei,

Haoran Geng,

Jiayi Chen,

Congyue Deng,

Wenbo Cui,

Chengyang Zhao,

Xiaomeng Fang,

Leonidas Guibas,

He Wang

Paper /

Project /

Code /

Bibtex

@inproceedings{

wei2024droma,

title={D3RoMa: Disparity Diffusion-based Depth Sensing for Material-Agnostic Robotic Manipulation},

author={Songlin Wei and Haoran Geng and Jiayi Chen and Congyue Deng and Wenbo Cui and Chengyang Zhao and Xiaomeng Fang and Leonidas Guibas and He Wang},

booktitle={8th Annual Conference on Robot Learning},

year={2024},

url={https://openreview.net/forum?id=7E3JAys1xO}

}

CoRL 2024

In this work, we propose D3RoMa, a learning-based depth estimation framework on stereo image pairs that predicts clean and accurate depth in diverse indoor scenes, even in the most challenging scenarios with translucent or specular surfaces.

|

|

|

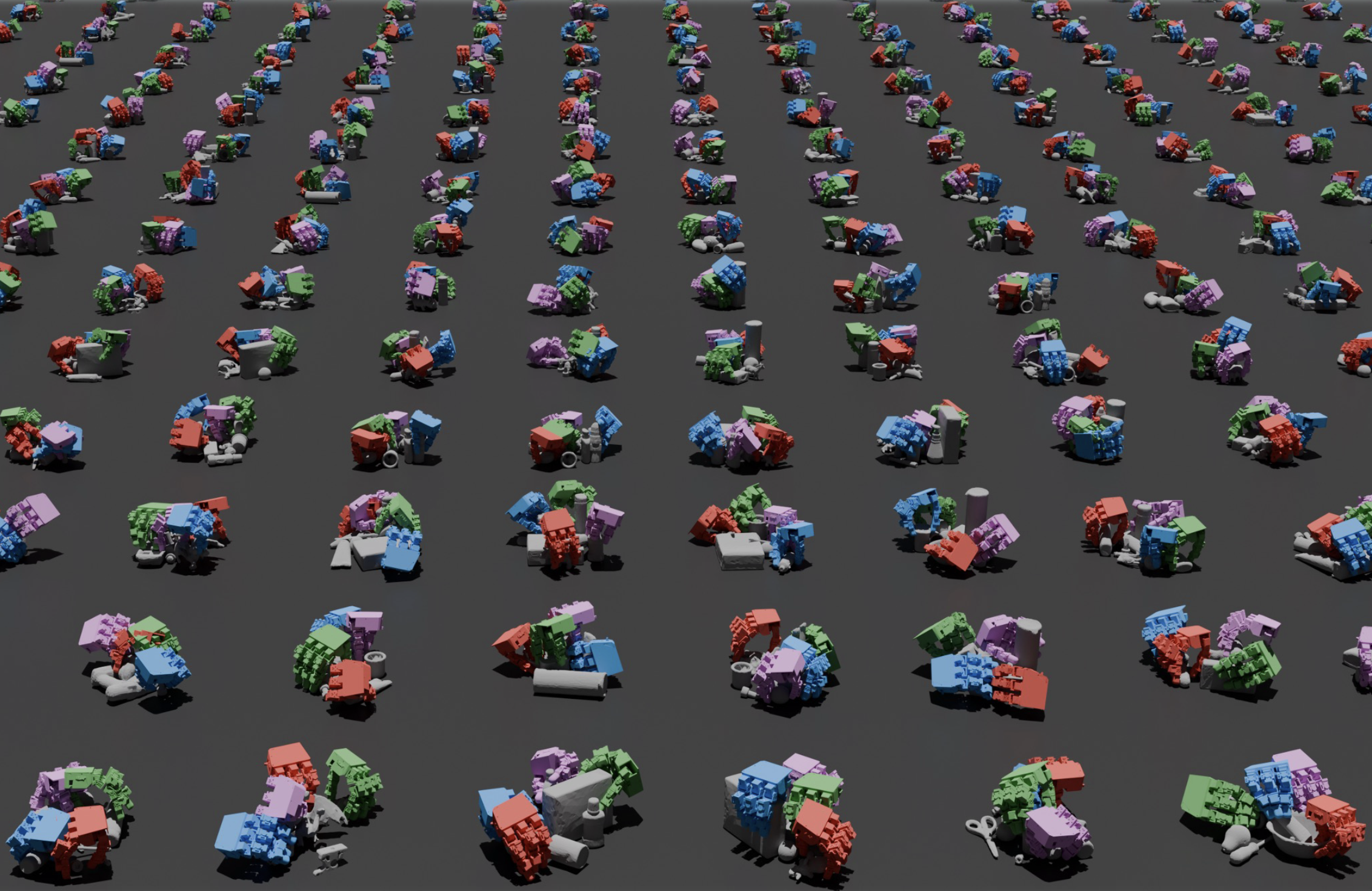

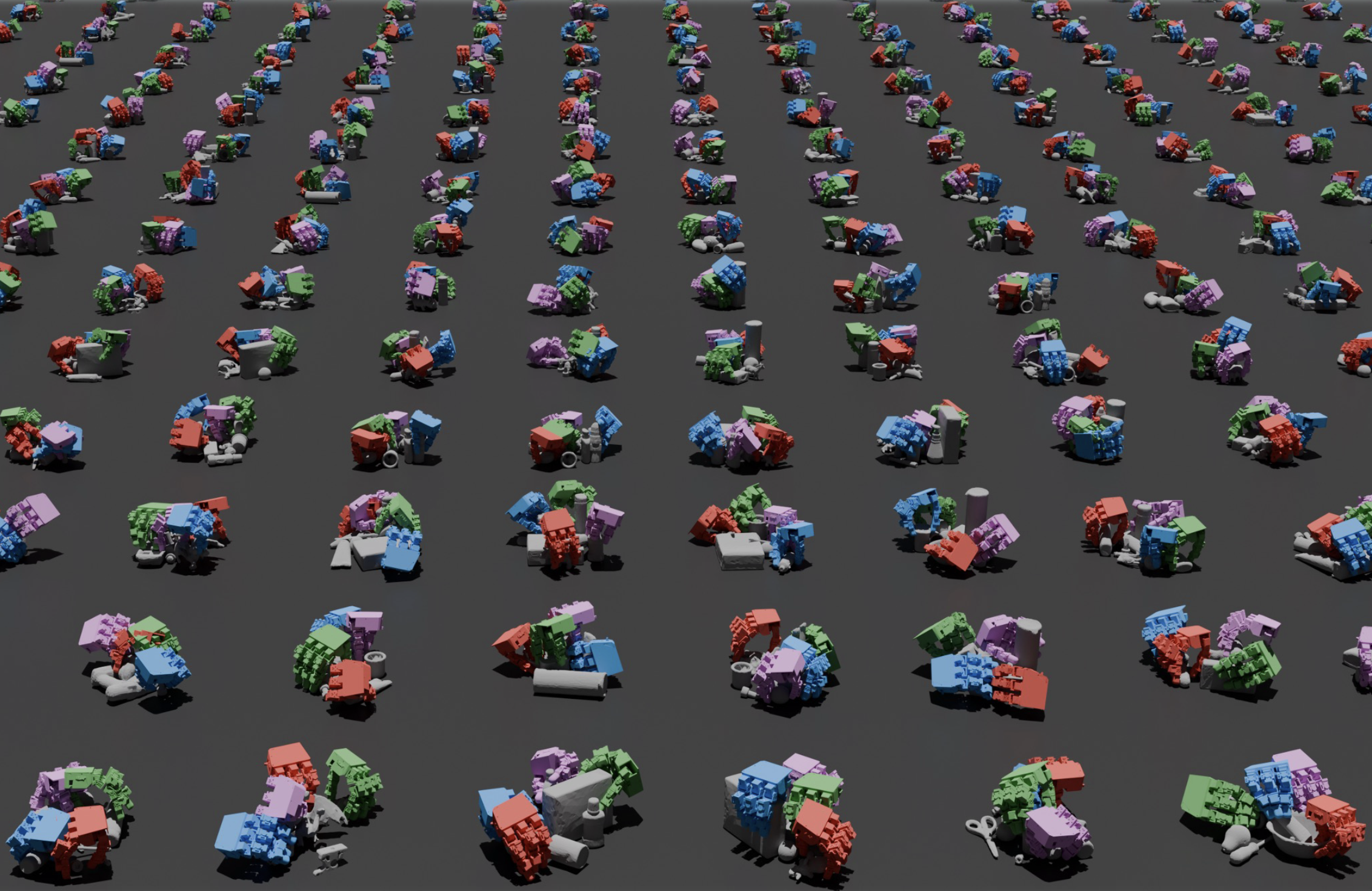

DexGraspNet 2.0: Learning Generative Dexterous Grasping in Large-scale Synthetic Cluttered Scenes

Jialiang Zhang*,

Haoran Liu*,

Danshi Li*,

Xinqiang Yu*,

Haoran Geng,

Yufei Ding,

Jiayi Chen,

He Wang†

(*equal contribution)

Paper /

Project /

Code /

Bibtex

@misc{zhang2024dexgraspnet20learninggenerative,

title={DexGraspNet 2.0: Learning Generative Dexterous Grasping in Large-scale Synthetic Cluttered Scenes},

author={Jialiang Zhang and Haoran Liu and Danshi Li and Xinqiang Yu and Haoran Geng and Yufei Ding and Jiayi Chen and He Wang},

year={2024},

eprint={2410.23004},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2410.23004},

}

CoRL 2024

We synthesized a large-scale dexterous grasping dataset in cluttered scenes and designed a generative framework to learn grasping in the real world.

|

|

|

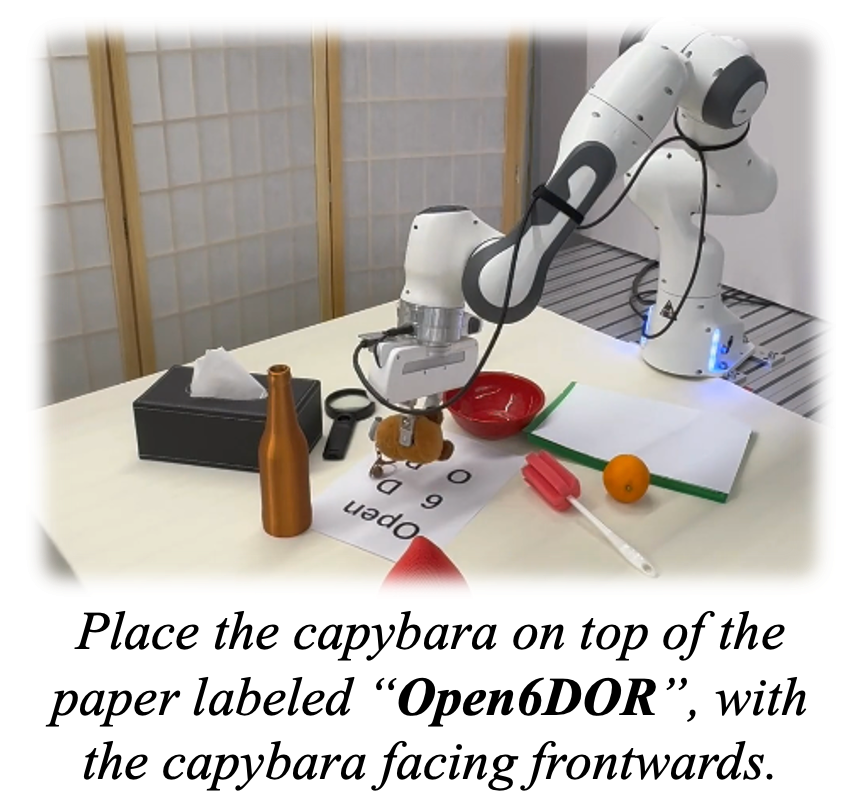

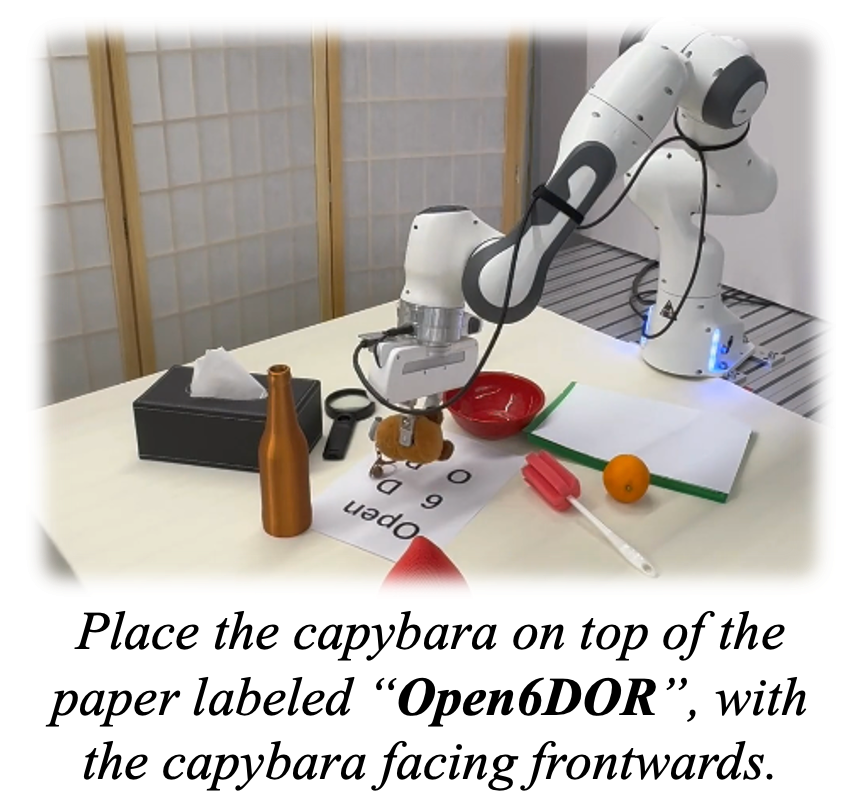

Open6DOR: Benchmarking Open-instruction 6-DoF Object Rearrangement and A VLM-based Approach

Yufei Ding*,

Haoran Geng*,

Chaoyi Xu,

Xiaomeng Fang,

Jiazhao Zhang,

Songlin Wei,

Qiyu Dai,

Zhizheng Zhang,

He Wang†

Paper

/

Project Page

/

Code

/

Video

/

Bibtex

@INPROCEEDINGS{10802733,

author={Ding, Yufei and Geng, Haoran and Xu, Chaoyi and Fang, Xiaomeng and Zhang, Jiazhao and Wei, Songlin and Dai, Qiyu and Zhang, Zhizheng and Wang, He},

booktitle={2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title={Open6DOR: Benchmarking Open-instruction 6-DoF Object Rearrangement and A VLM-based Approach},

year={2024},

volume={},

number={},

pages={7359-7366},

keywords={Three-dimensional displays;Benchmark testing;Propulsion;6-DOF;Real-time systems;Artificial intelligence;Intelligent robots;Synthetic data},

doi={10.1109/IROS58592.2024.10802733}

}

IROS 2024, Oral Presentation

We present Open6DOR, a challenging and comprehensive benchmark for open-instruction 6-DoF

object rearrangement tasks. Following this, we propose a zero-shot and robust method,

Open6DORGPT, which proves effective in demanding simulation environments and real-world scenarios.

|

|

|

Simulately: Handy information and resources for physics simulators for robot learning research.

Haoran Geng,

Yuyang Li,

Yuzhe Qin,

Ran Gong,

Wensi Ai,

Yuanpei Chen,

Puhao Li,

Junfeng Ni,

Zhou Xian,

Songlin Wei,

Yang You,

Yufei Ding,

Jialiang Zhang

Website

/

Github

Open-source Project

Selected into CMU 16-831

Simulately is a project where we gather useful information about robotics and physics simulators for cutting-edge robot learning research.

|

|

|

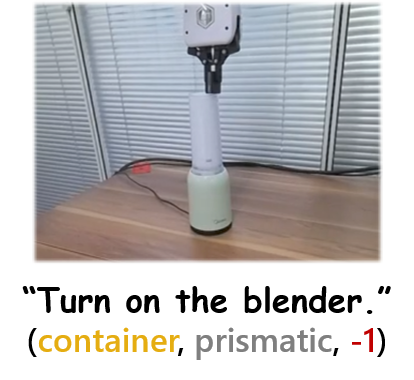

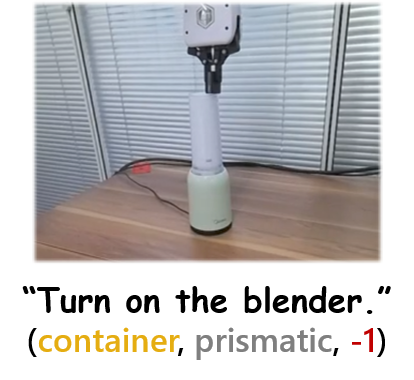

SAGE🌿: Bridging Semantic and Actionable Parts for Generalizable Articulated-Object Manipulation under Language Instructions

Haoran Geng*,

Songlin Wei*,

Congyue Deng,

Bokui Shen,

He Wang†,

Leonidas Guibas†

ArXiv

/

Project Page

/

Video

/

Bibtex

@misc{geng2023sage,

title={SAGE: Bridging Semantic and Actionable Parts for GEneralizable Articulated-Object Manipulation under Language Instructions},

author={Haoran Geng and Songlin Wei and Congyue Deng and Bokui Shen and He Wang and Leonidas Guibas},

year={2023},

eprint={2312.01307},

archivePrefix={arXiv},

primaryClass={cs.RO}

}

RSS 2024, Oral Presentation

RSS 2024 @ SemRob, Best Paper Award

We present SAGE, a framework bridging the understanding of semantic and actionable parts for generalizable manipulation of articulated objects using Large Language Models (LLMs) and Visual-Language Models (VLMs).

|

|

|

Ag2Manip: Learning Novel Manipulation Skills with Agent-Agnostic Visual and Action Representations

Puhao Li*,

Tengyu Liu*,

Yuyang Li,

Muzhi Han,

Haoran Geng,

Shu Wang,

Yixin Zhu,

Song-Chun Zhu,

Siyuan Huang

Paper /

Code /

Project Page /

Bibtex

@misc{li2024ag2maniplearningnovelmanipulation,

title={Ag2Manip: Learning Novel Manipulation Skills with Agent-Agnostic Visual and Action Representations},

author={Puhao Li and Tengyu Liu and Yuyang Li and Muzhi Han and Haoran Geng and Shu Wang and Yixin Zhu and Song-Chun Zhu and Siyuan Huang},

year={2024},

eprint={2404.17521},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2404.17521},

}

IROS 2024, Oral Presentation

We introduce Ag2Manip, which enables various robotic manipulation tasks without any domain-specific demonstrations. Ag2Manip also supports robust imitation learning of manipulation skills in the real world.

|

|

|

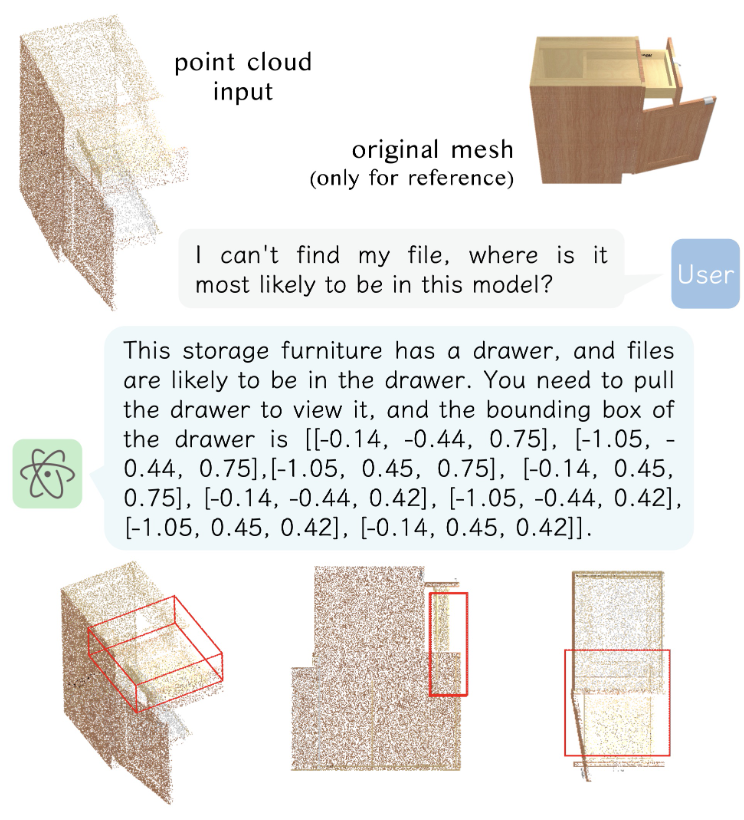

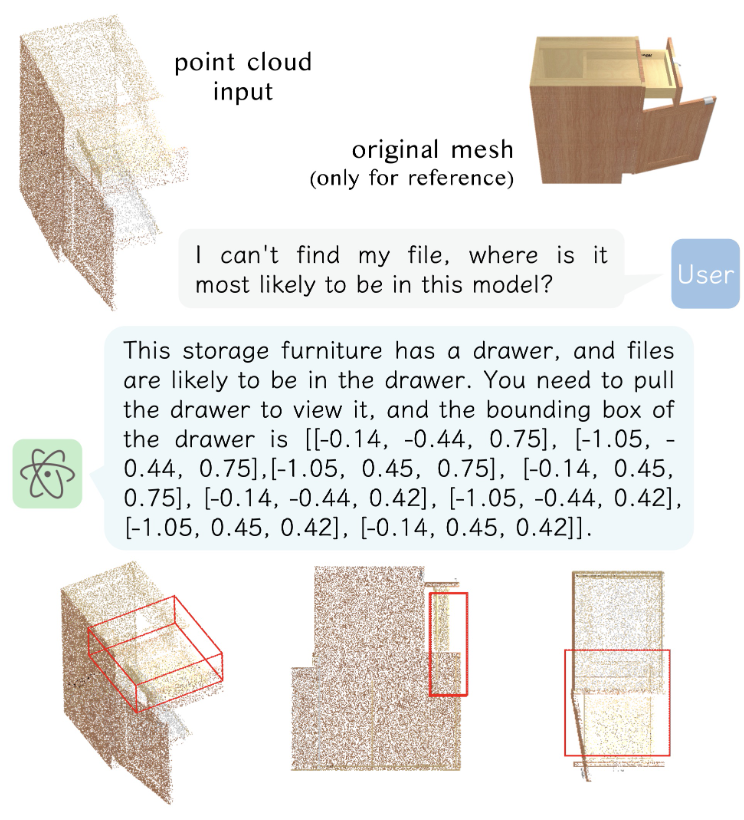

ShapeLLM: Universal 3D Object Understanding for Embodied Interaction

Zekun Qi,

Runpei Dong,

Shaochen Zhang,

Haoran Geng,

Chunrui Han,

Zheng Ge,

He Wang,

Li Yi,

Kaisheng Ma

ArXiv

/

Project Page

/

Bibtex

@article{shapellm24,

author = {Zekun Qi and Runpei Dong and Shaochen Zhang and Haoran Geng and Chunrui Han and Zheng Ge and He Wang and Li Yi and Kaisheng Ma},

title = {ShapeLLM: Universal 3D Object Understanding for Embodied Interaction},

journal = {CoRR},

volume = {abs/2402.17766},

year = {2024},

eprinttype = {arXiv},

eprint = {2402.17766},

}

ECCV 2024

We present ShapeLLM, the first 3D Multimodal Large Language Model (LLM) designed for embodied interaction, exploring universal 3D object understanding with 3D point clouds and language.

|

|

|

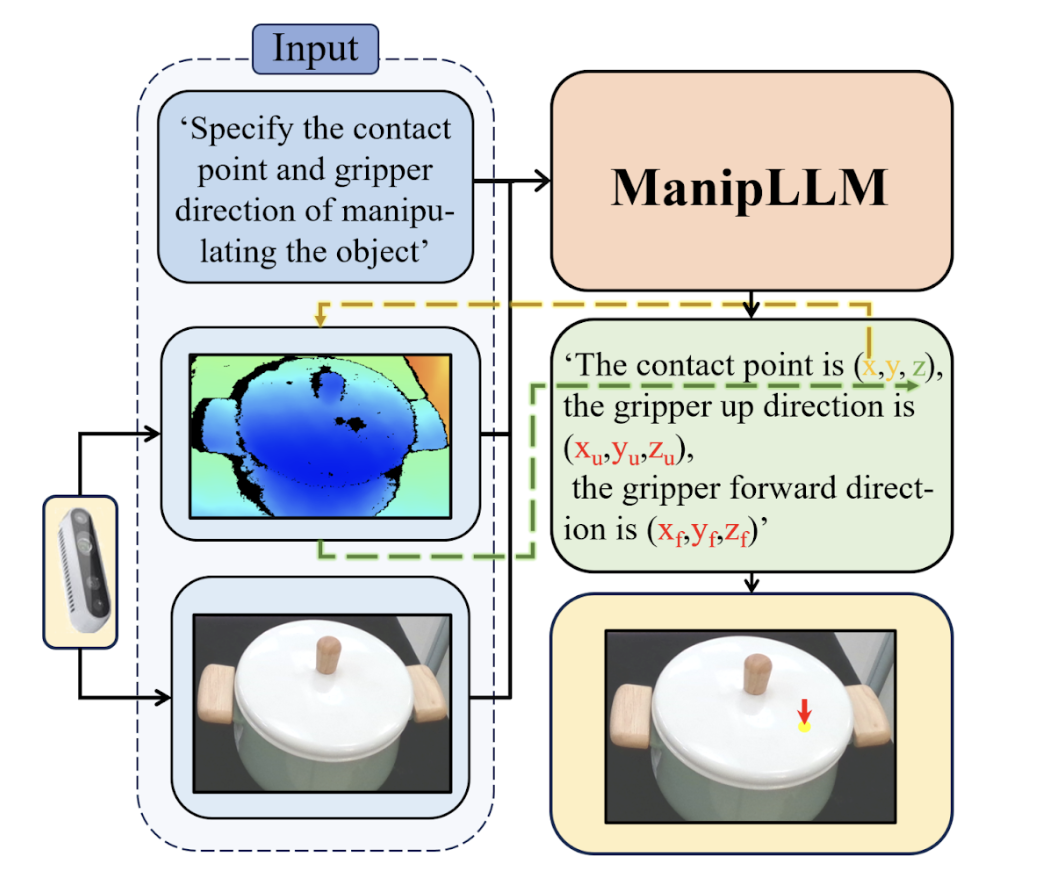

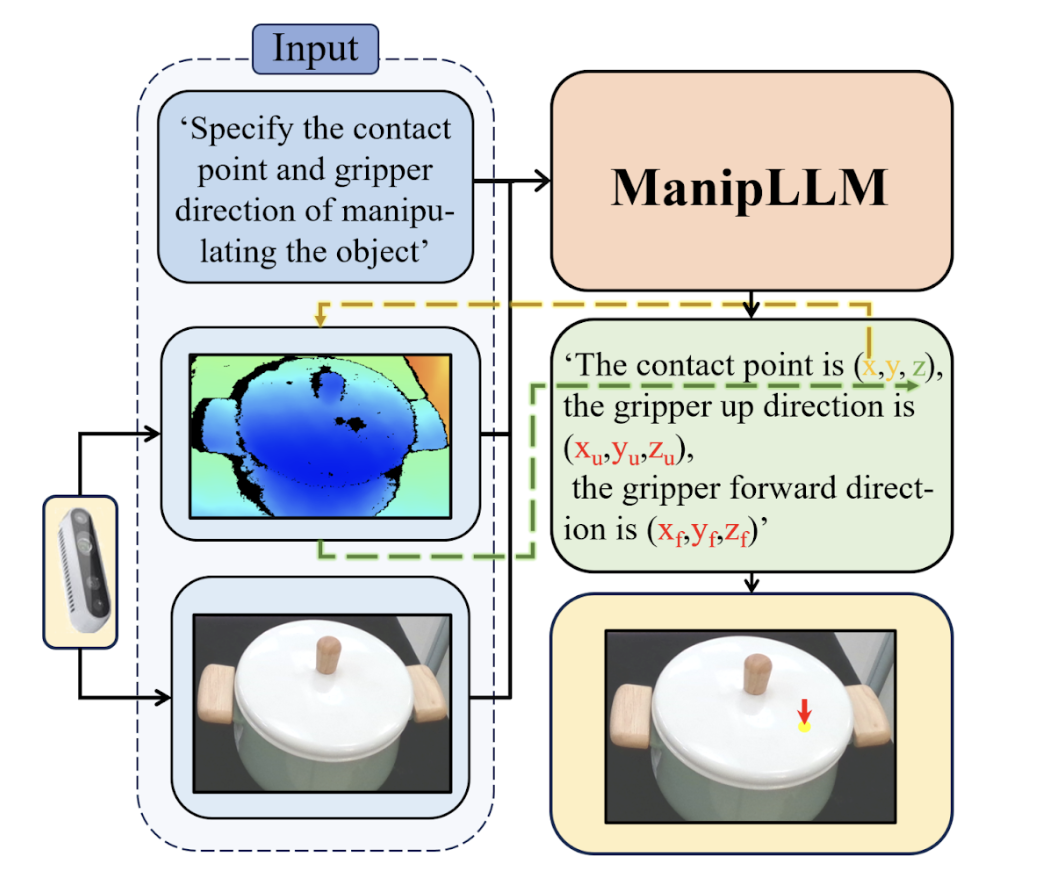

ManipLLM: Embodied Multimodal Large Language Model for Object-Centric Robotic Manipulation

Xiaoqi Li,

Mingxu Zhang,

Yiran Geng,

Haoran Geng,

Yuxing Long,

Yan Shen,

Renrui Zhang,

Jiaming Liu,

Hao Dong†

ArXiv

/

Project Page

/

Bibtex

@misc{li2023manipllm,

title={ManipLLM: Embodied Multimodal Large Language Model for Object-Centric Robotic Manipulation},

author={Xiaoqi Li and Mingxu Zhang and Yiran Geng and Haoran Geng and Yuxing Long and Yan Shen and Renrui Zhang and Jiaming Liu and Hao Dong},

year={2023},

eprint={2312.16217},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

CVPR 2024

We present ManipLLM, introducing an innovative approach for robot manipulation that leverages the robust

reasoning capabilities of Multimodal Large Language Models (MLLMs) to enhance the stability

and generalization of manipulation.

|

|

|

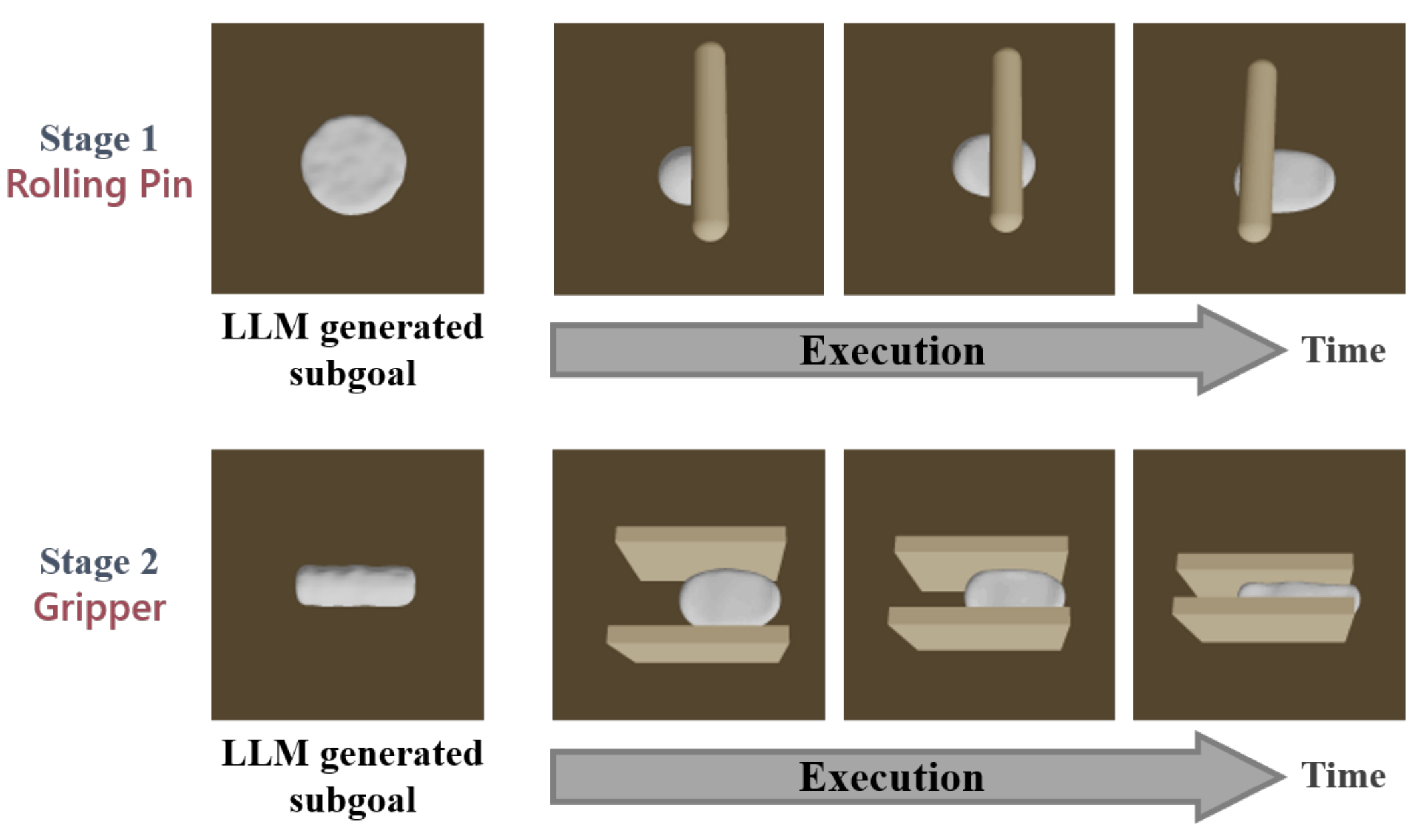

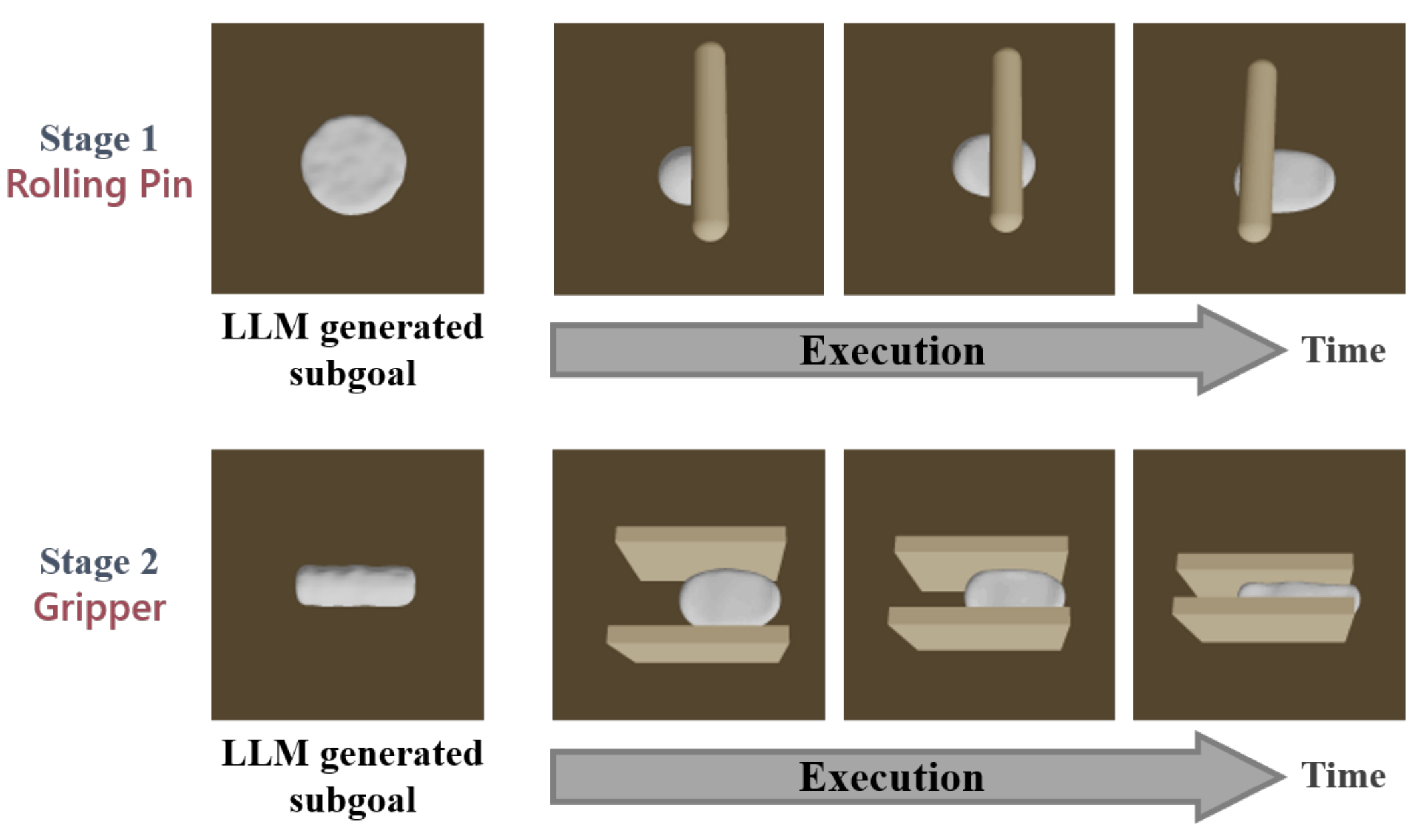

Make a Donut🍩: Language-guided Hierarchical EMD-Space Planning for Zero-shot Deformable Object Manipulation

Yang You,

Bokui Shen,

Congyue Deng,

Haoran Geng,

He Wang,

Leonidas Guibas†

ArXiv

/

Project Page

/

Bibtex

@misc{

you2023make,

title={Make a Donut: Language-Guided Hierarchical EMD-Space Planning for Zero-shot Deformable Object Manipulation},

author={Yang You and Bokui Shen and Congyue Deng and Haoran Geng and He Wang and Leonidas Guibas},

year={2023},

eprint={2311.02787},

archivePrefix={arXiv},

primaryClass={cs.RO}

}

RA-L

In this work, we introduce a demonstration-free hierarchical planning approach capable of tackling intricate long-horizon tasks without requiring any training.

|

|

|

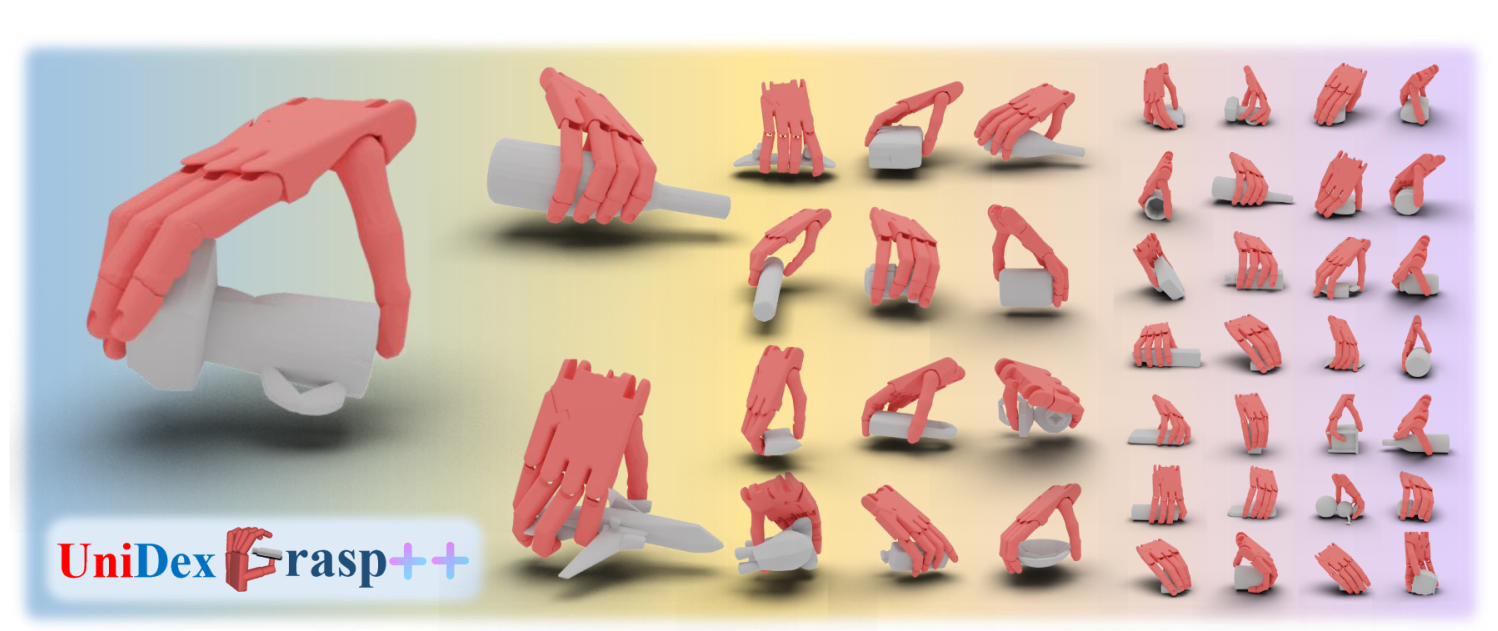

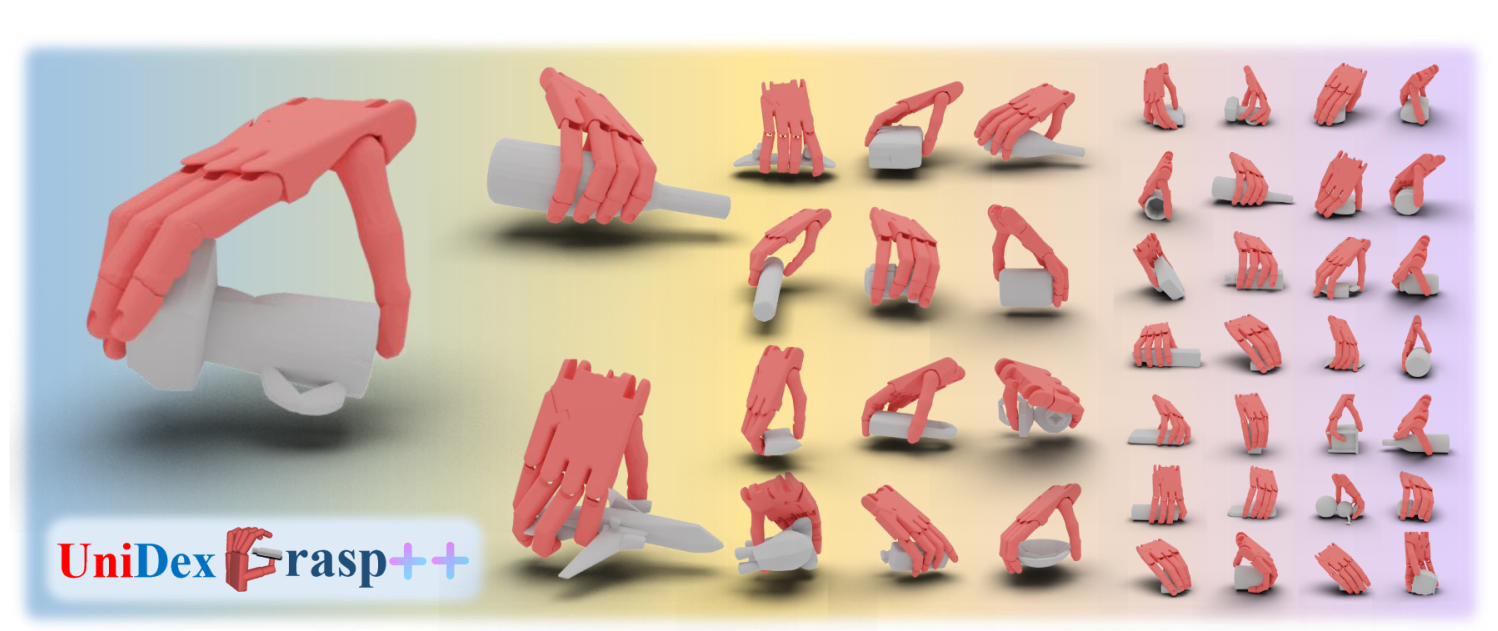

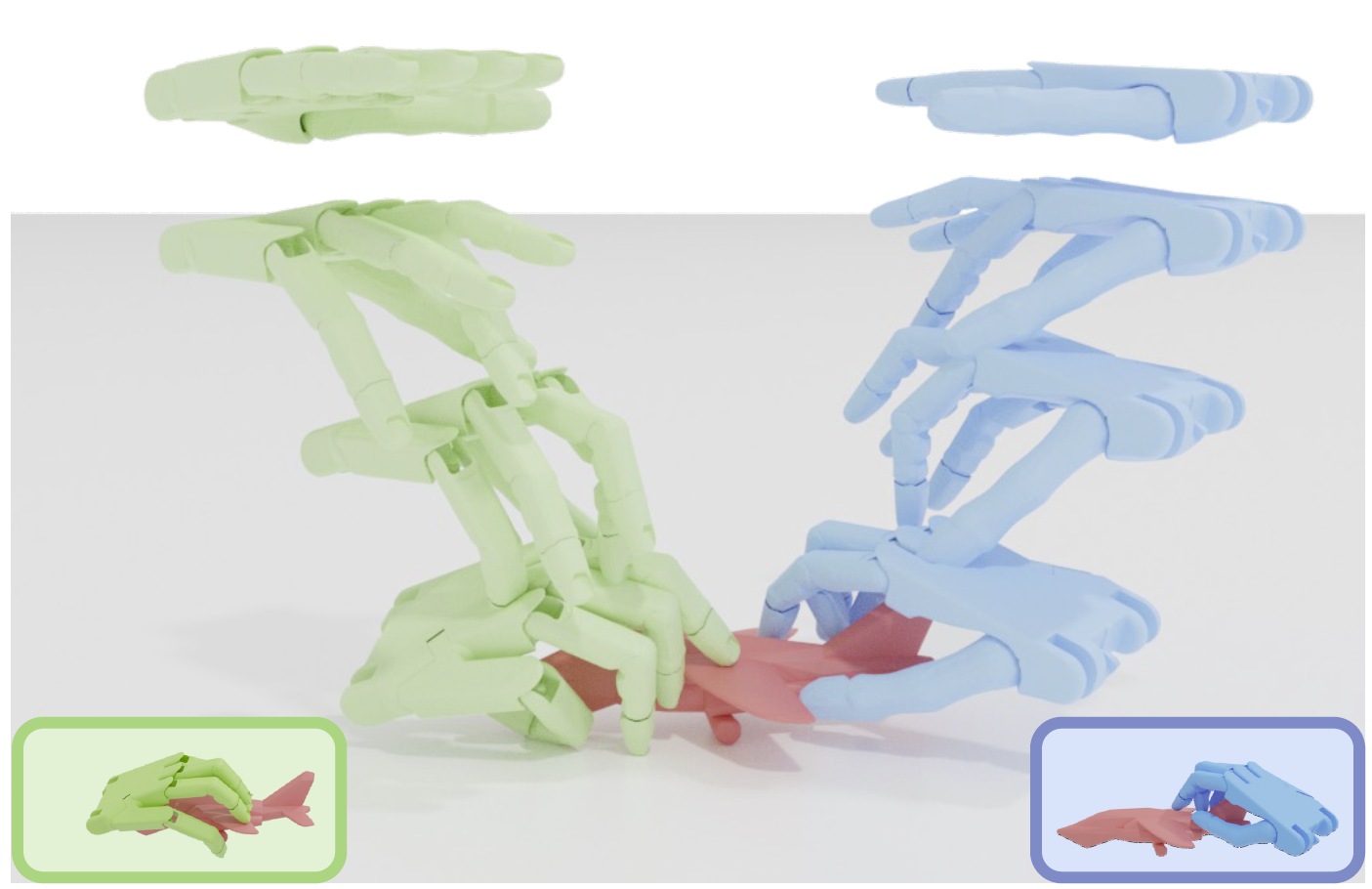

UniDexGrasp++: Improving Dexterous Grasping Policy Learning via Geometry-aware Curriculum and Iterative Generalist-Specialist Learning

Weikang Wan*,

Haoran Geng*,

Yun Liu,

Zikang Shan,

Yaodong Yang,

Li Yi,

He Wang†

(*equal contribution)

ArXiv

/

Project Page

/

Code

/

Media(CFCS)

/

Bibtex

@article{wan2023unidexgrasp++,

title={UniDexGrasp++: Improving Dexterous Grasping Policy Learning via Geometry-aware Curriculum and Iterative Generalist-Specialist Learning},

author={Wan, Weikang and Geng, Haoran and Liu, Yun and Shan, Zikang and Yang, Yaodong and Yi, Li and Wang, He},

journal={arXiv preprint arXiv:2304.00464},

year={2023}

}

ICCV 2023, Oral Presentation with all top ratings (strong accept)

ICCV 2023, Best Paper Finalist

We propose a novel, object-agnostic method for learning a universal policy for dexterous object

grasping from realistic point cloud observations and proprioceptive information under a table-top setting.

|

|

|

ARNOLD: A Benchmark for Language-Grounded Task Learning With Continuous States in Realistic 3D Scenes

Ran Gong*,

Jiangyong Huang*,

Yizhou Zhao,

Haoran Geng,

Xiaofeng Gao,

Qingyang Wu,

Wensi Ai,

Ziheng Zhou,

Demetri Terzopoulos,

Song-Chun Zhu,

Baoxiong Jia,

Siyuan Huang

ArXiv

/

Project Page

/

Code

/

Bibtex

@article{gong2023arnold,

title={ARNOLD: A Benchmark for Language-Grounded Task Learning With Continuous States in Realistic 3D Scenes},

author={Gong, Ran and Huang, Jiangyong and Zhao, Yizhou and Geng, Haoran and Gao, Xiaofeng and Wu, Qingyang and Ai, Wensi and Zhou, Ziheng and Terzopoulos, Demetri and Zhu, Song-Chun and Jia, Baoxiong and Huang, Siyuan},

journal={arXiv preprint arXiv:2304.04321},

year={2023}

}

ICCV 2023

CoRL 2022 @ LangRob, Spotlight Presentation

We present ARNOLD, a benchmark that evaluates language-grounded task learning with continuous states in realistic 3D scenes.

|

|

|

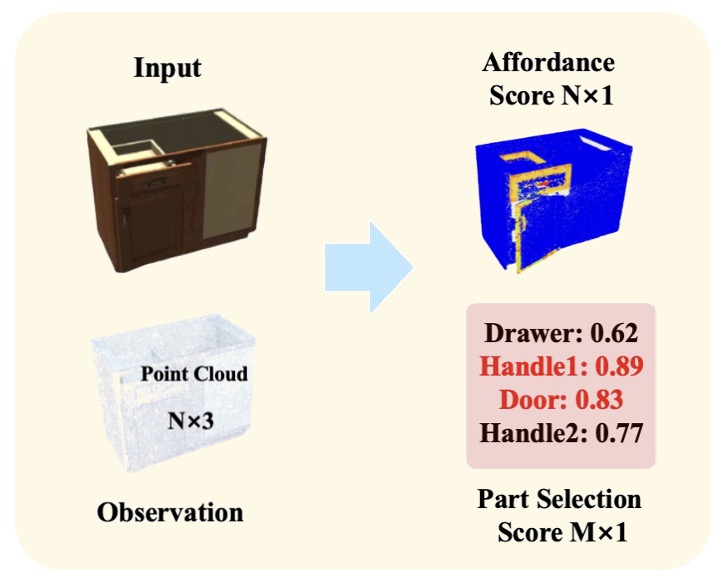

GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts

Haoran Geng*,

Helin Xu*,

Chengyang Zhao*,

Chao Xu,

Li Yi,

Siyuan Huang,

He Wang†

ArXiv

/

Project Page

/

Code

/

Dataset

/

Poster

/

CVPR Page

/

Media(CFCS)

/

Bibtex

@article{geng2022gapartnet,

title={GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts},

author={Geng, Haoran and Xu, Helin and Zhao, Chengyang and Xu, Chao and Yi, Li and Huang, Siyuan and Wang, He},

journal={arXiv preprint arXiv:2211.05272},

year={2022}

}

CVPR 2023, Highlight (Top 2.5% of submissions) with all top ratings

We propose to learn cross-category generalizable object perception and manipulation skills via Generalizable and Actionable Parts (GAParts), and present GAPartNet, a large-scale interactive dataset with rich part annotations.

|

|

|

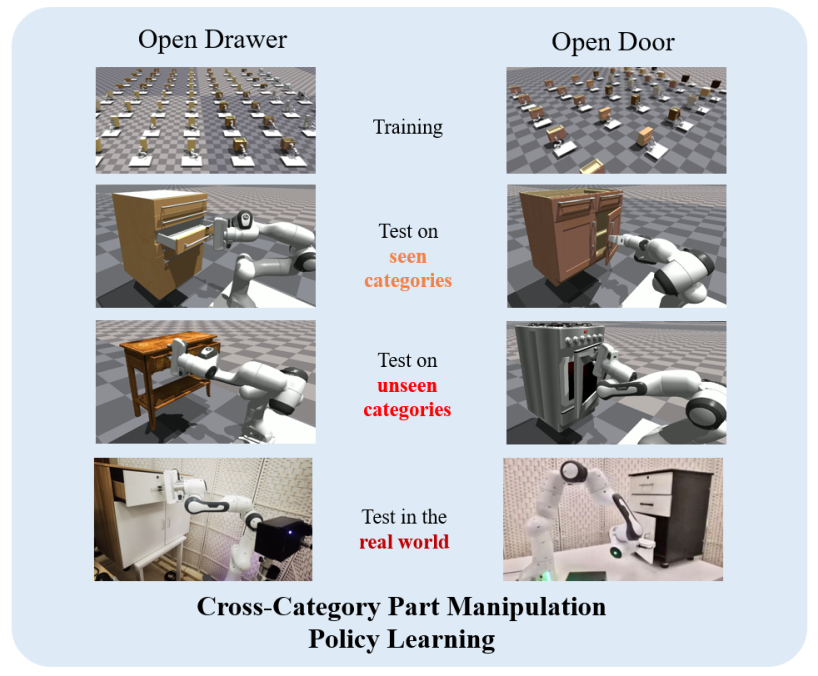

PartManip: Learning Cross-Category Generalizable Part Manipulation Policy from Point Cloud Observations

Haoran Geng*,

Ziming Li*,

Yiran Geng,

Jiayi Chen,

Hao Dong,

He Wang†

ArXiv

/

Project Page

/

Code

/

Dataset

/

Poster

/

CVPR Page

/

Bibtex

@article{geng2023partmanip,

title={PartManip: Learning Cross-Category Generalizable Part Manipulation Policy from Point Cloud Observations},

author={Geng, Haoran and Li, Ziming and Geng, Yiran and Chen, Jiayi and Dong, Hao and Wang, He},

journal={arXiv preprint arXiv:2303.16958},

year={2023}

}

CVPR 2023

We introduce a large-scale, cross-category part-based object manipulation benchmark

with tasks in realistic, vision-based settings and design a novel augmented state-to-vision

distillation method for these challenging tasks.

|

|

|

UniDexGrasp: Universal Robotic Dexterous Grasping via Learning Diverse Proposal Generation and Goal-Conditioned Policy

Yinzhen Xu*,

Weikang Wan*,

Jialiang Zhang*,

Haoran Liu*,

Zikang Shan,

Hao Shen,

Ruicheng Wang,

Haoran Geng,

Yijia Weng,

Jiayi Chen,

Tengyu Liu,

Li Yi,

He Wang†

ArXiv

/

Project Page

/

Code

/

CVPR Page

/

Bibtex

@article{xu2023unidexgrasp,

title={UniDexGrasp: Universal Robotic Dexterous Grasping via Learning Diverse Proposal Generation and Goal-Conditioned Policy},

author={Xu, Yinzhen and Wan, Weikang and Zhang, Jialiang and Liu, Haoran and Shan, Zikang and Shen, Hao and Wang, Ruicheng and Geng, Haoran and Weng, Yijia and Chen, Jiayi and others},

journal={arXiv preprint arXiv:2303.00938},

year={2023}

}

CVPR 2023

We tackle the problem of learning universal robotic dexterous grasping from a

point cloud observation under a table-top setting.

|

|

|

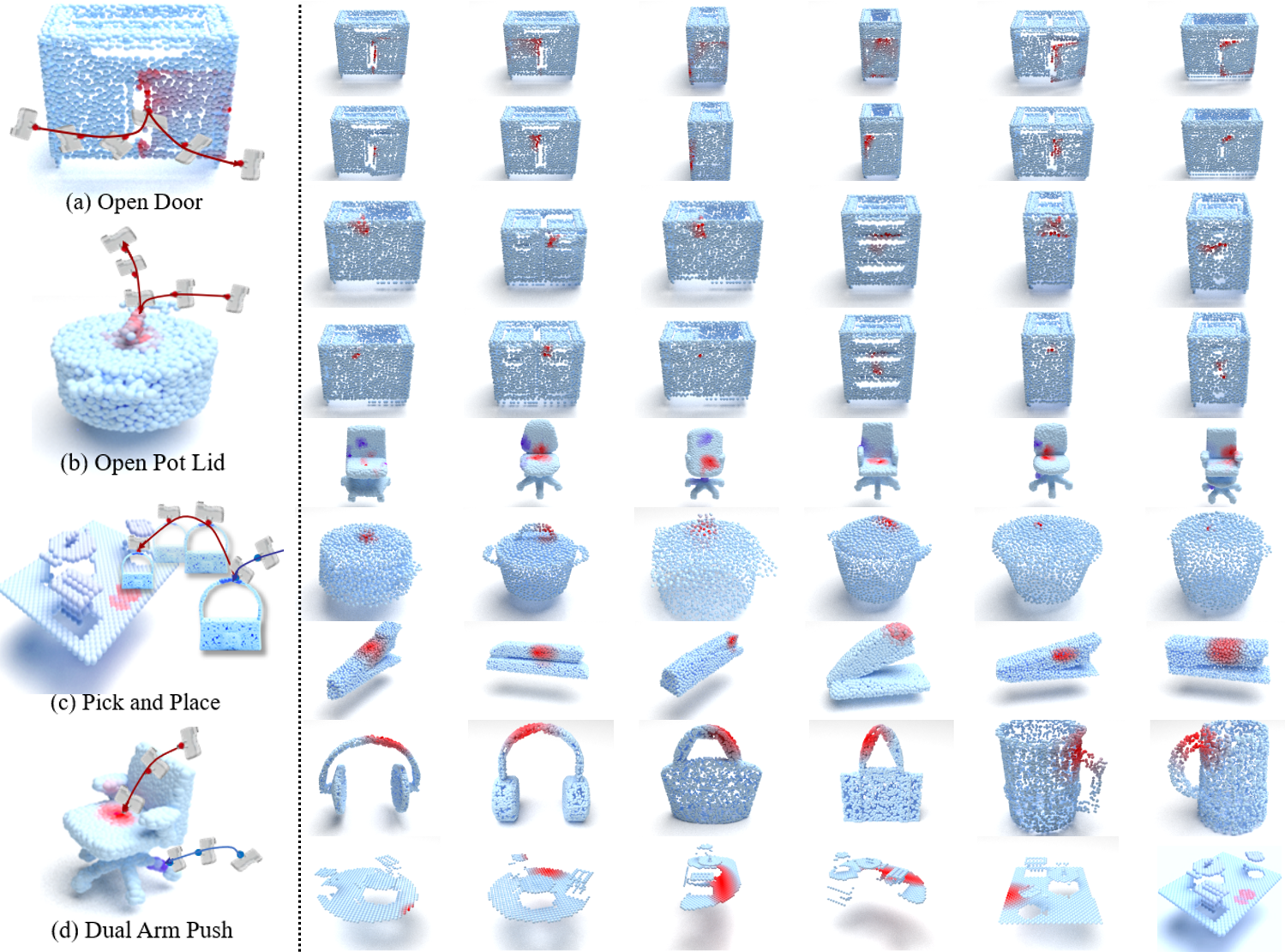

Learning Part-Aware Visual Actionable Affordance for 3D Articulated Object Manipulation

Yuanchen Ju*,

Haoran Geng*,

Ming Yang*,

Yiran Geng,

Yaroslav Ponomarenko,

Taewhan Kim,

He Wang,

Hao Dong†

Paper

/

Video

/

Workshop


CVPR 2023 @ 3DVR, Spotlight Presentation

We introduce part-aware affordance learning methods. Our approach first learns a part prior and then generates an affordance map. We further improve precision with a part-level scoring system designed to identify the best part for manipulation.

|

|

|

RLAfford: End-to-End Affordance Learning for Robotic Manipulation

Yiran Geng*,

Boshi An*,

Haoran Geng,

Yuanpei Chen,

Yaodong Yang†,

Hao Dong†

ArXiv

/

Project Page

/

Video

/

Code

/

Media (CFCS)

/

Bibtex

@article{geng2022end,

title={End-to-End Affordance Learning for Robotic Manipulation},

author={Geng, Yiran and An, Boshi and Geng, Haoran and Chen, Yuanpei and Yang, Yaodong and Dong, Hao},

journal={International Conference on Robotics and Automation (ICRA)},

year={2023}

}

ICRA 2023

In this study, we take advantage of visual affordance by using the contact information generated during the RL training process to predict contact maps of interest.

|

Selected Awards and Honors

2025: Best Paper Award Finalist, CoRL 2025

2025: Best Open Source Award, IROS 2025 @ RoboGen

2025: Best Paper Award, IROS 2025 @ RGMCW

2025: Qualcomm Innovation Fellowship (the only Berkeley winner team this year), Qualcomm

2024: Best Paper Award, RSS 2024 @ SemRob

2024: Yunfan Award for Rising Star (the only undergraduate student to win this award so far), WAIC

2024: Stanford Graduate Fellowship Award, Stanford

2024: Berkeley Fellowship Award, UC Berkeley

2024: Best Graduation Thesis Award, EECS, Peking University

2024: Outstanding Graduation Thesis Scholarship, Peking University

2024: Top 10 Graduation Thesis (ranking 1st), EECS, Peking University

2024: Outstanding Graduates of Beijing

2024: Outstanding Graduates of Peking University, Peking University

2023: ICCV Best Paper Award (Marr Prize) Finalist

2023: Person of the Year (10 people/year), Peking University

2023: Research Rising Star Award (First Prize), BIGAI

2023: Outstanding Overseas Exchange Scholarship

2023: Academic Innovation Award of Peking University

2023: May Fourth Scholarship (Highest-level Scholarship for Peking University, 125/65k+)

2021-2023: Merit Student of Peking University

2023: Turing Student Research Forum: Best Presentation Award & Best Poster Award

2023: School of EECS Research Exhibition: Best Presentation Award

2022: Center on Frontiers of Computing Studies (CFCS) Outstanding Student

2022: Arawana Scholarship

2021-2023: Zhongying Moral Education Scholarship

2022: Turing Student Research Forum: Outstanding Presentation Award

2021: National Scholarship (Highest Honor for undergraduates in China)

2021: SenseTime Scholarship (Youngest winner, 30/year in China)

2021: Ministry of Education Top Talent Program Scholarship

|

This homepage is designed based on Jon Barron's website and deployed on GitHub Pages. Last updated: Aug. 29, 2023

© 2024 Haoran Geng

|